5 Extraction Fixes

Introduction to Extraction Fixes

Extracting data from databases, files, or websites is often error-prone, especially with large datasets or complex file formats. Extraction fixes keep the extracted data accurate, complete, and consistent. This article covers five of them.

Fix 1: Handling Missing Values

Missing values are among the most common problems in data extraction. They can come from incomplete source records, data-entry mistakes, or data corruption. Handle them with a robust validation and cleaning step: detect which fields are missing, identify why they are missing (a value that means "not applicable" should not be treated like one lost to a parsing bug), and then impute them with an appropriate method such as the mean, the median, or a machine-learning model trained on the other fields.
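
As an illustration, the detect-then-impute step might look like the sketch below. It assumes records are plain dictionaries in which `None` or an empty string marks a missing value, and it uses median imputation; the field name `age` and the helpers `is_missing` and `impute_median` are hypothetical. It also records which rows were imputed so later analysis can tell real values from filled ones.

```python
from __future__ import annotations

import statistics

# Hypothetical extracted records; None or "" marks a missing value.
records = [
    {"id": 1, "age": 34},
    {"id": 2, "age": None},
    {"id": 3, "age": 29},
    {"id": 4, "age": ""},
    {"id": 5, "age": 41},
]

def is_missing(value) -> bool:
    """Treat None, empty strings, and whitespace-only strings as missing."""
    return value is None or (isinstance(value, str) and value.strip() == "")

def impute_median(rows: list[dict], field: str) -> tuple[list[dict], list[int]]:
    """Return copies of rows with missing `field` values replaced by the median
    of the observed values, plus the ids of the rows that were imputed."""
    observed = [r[field] for r in rows if not is_missing(r.get(field))]
    if not observed:
        raise ValueError(f"no observed values for {field!r}; cannot impute")
    fill = statistics.median(observed)
    result, imputed_ids = [], []
    for r in rows:
        row = dict(r)  # copy so the original extracted records stay untouched
        if is_missing(row.get(field)):
            row[field] = fill
            imputed_ids.append(row["id"])
        result.append(row)
    return result, imputed_ids

cleaned, imputed = impute_median(records, "age")
print(imputed)                       # [2, 4]
print([r["age"] for r in cleaned])   # [34, 34, 29, 34, 41]
```

The median is used here because it is less sensitive to outliers than the mean; swapping in `statistics.mean` or a model-based imputer changes only the line that computes `fill`.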

Fix 2: Data Standardization

Data standardization converts values into a single agreed format so that records from different sources can be compared and analyzed. Typical steps include normalizing date formats, converting fields to the correct data types, and resolving formatting inconsistencies such as stray whitespace or mixed letter case. Standardized data reduces downstream errors and improves the overall quality of the extracted data.
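
A minimal sketch of these steps follows, assuming three input date formats (ISO, day-first `dd/mm/yyyy`, and `Mar 05, 2024` style) and amounts that use `,` as a thousands separator. The format list, field names, and helper functions are hypothetical; adapt them to the sources you actually extract from.

```python
from __future__ import annotations

from datetime import datetime

# Assumed input formats. "%d/%m/%Y" is day-first: "05/03/2024" means 5 March.
# "%b" expects English month abbreviations under Python's default C locale.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%b %d, %Y")

def standardize_date(raw: str) -> str:
    """Parse a date in any of DATE_FORMATS and return it as ISO 8601 (YYYY-MM-DD)."""
    text = raw.strip()
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue  # try the next known format
    raise ValueError(f"unrecognized date format: {raw!r}")

def standardize_amount(raw: str) -> float:
    """Convert strings such as ' 1,200.00 ' to a float (',' assumed to be a thousands separator)."""
    return float(raw.strip().replace(",", ""))

def standardize_record(record: dict) -> dict:
    """Apply one canonical format per field."""
    return {
        "name": record["name"].strip().title(),
        "date": standardize_date(record["date"]),
        "amount": standardize_amount(record["amount"]),
    }

rows = [
    {"name": "  alice smith ", "date": "2024-03-05", "amount": "1,200.00"},
    {"name": "BOB JONES", "date": "05/03/2024", "amount": " 87.5"},
    {"name": "carol white", "date": "Mar 05, 2024", "amount": "42"},
]
for row in rows:
    print(standardize_record(row))
```

Raising on an unrecognized date, rather than guessing, keeps ambiguous values visible so they can be routed to error handling instead of being silently misread.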

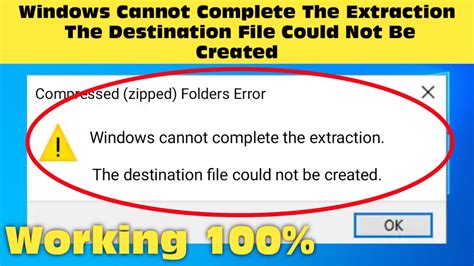

Fix 3: Error Handling

Error handling means detecting and responding to failures that occur during extraction, such as invalid records, corrupted input, or system errors like an unreadable file or a dropped connection. Robust error handling keeps a single bad record from halting the whole job or silently corrupting the output, which limits the impact of errors and keeps the extracted data accurate and reliable.
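
One common pattern, sketched below under stated assumptions, is to parse records one at a time and quarantine the ones that fail instead of aborting the run. The CSV layout, the field names, and the helpers `parse_row` and `extract` are hypothetical. Only `ValueError` (bad data) is caught; system errors are deliberately left uncaught so that a failing source stops the job.

```python
from __future__ import annotations

import csv
import io
import logging

logging.basicConfig(level=logging.WARNING)
log = logging.getLogger("extract")  # hypothetical logger name

# Hypothetical source: line 3 has a non-numeric quantity, line 4 lacks a price.
RAW = """sku,quantity,price
A-100,3,9.99
A-101,two,4.50
A-102,1
A-103,5,2.00
"""

REQUIRED = ("sku", "quantity", "price")

def parse_row(row: dict) -> dict:
    """Convert one CSV row to typed fields; raise ValueError on bad data."""
    # DictReader fills absent trailing columns with None by default.
    missing = [k for k in REQUIRED if row.get(k) in (None, "")]
    if missing:
        raise ValueError(f"missing fields: {', '.join(missing)}")
    return {
        "sku": row["sku"],
        "quantity": int(row["quantity"]),  # raises ValueError for e.g. "two"
        "price": float(row["price"]),
    }

def extract(text: str) -> tuple[list[dict], list[dict]]:
    """Keep rows that parse; quarantine rows that don't instead of aborting.

    System errors such as OSError are not caught here, so they propagate.
    """
    good, rejected = [], []
    reader = csv.DictReader(io.StringIO(text))
    for row in reader:
        try:
            good.append(parse_row(row))
        except ValueError as exc:
            # line_num counts source lines read so far, including the header
            log.warning("line %d rejected: %s", reader.line_num, exc)
            rejected.append({"line": reader.line_num, "row": row, "error": str(exc)})
    return good, rejected

good, rejected = extract(RAW)
print(len(good), len(rejected))  # 2 2
```

Keeping the rejected rows with their line number and error message makes it possible to fix the source and re-run only the failures.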

Fix 4: Data Validation

Data validation checks the extracted data against a set of rules and constraints to confirm that it meets the required standards. Common checks include data type consistency, range checks (for example, an age between 0 and 130), and format checks (for example, a well-formed email address). Validation surfaces errors so they can be corrected before the data is used.
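
A rule-based validator can be as small as the sketch below, which assumes each rule is a description paired with a predicate. The fields `age` and `email`, the 0 to 130 range, and the simplified email pattern are illustrative assumptions rather than recommended rules.

```python
from __future__ import annotations

import re
from typing import Any, Callable

# Deliberately simple format check; full email validation is more involved.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

# Hypothetical rule set: field name -> list of (description, predicate) pairs.
RULES: dict[str, list[tuple[str, Callable[[Any], bool]]]] = {
    "age": [
        # bool is a subclass of int in Python, so exclude it explicitly
        ("is an integer", lambda v: isinstance(v, int) and not isinstance(v, bool)),
        ("is between 0 and 130", lambda v: isinstance(v, int) and 0 <= v <= 130),
    ],
    "email": [
        ("is a string", lambda v: isinstance(v, str)),
        ("looks like an email address",
         lambda v: isinstance(v, str) and EMAIL_RE.match(v) is not None),
    ],
}

def validate(record: dict) -> list[str]:
    """Return human-readable rule violations; an empty list means the record passes."""
    errors = []
    for field, rules in RULES.items():
        value = record.get(field)  # a missing field is checked as None
        for description, check in rules:
            if not check(value):
                errors.append(f"{field}={value!r} fails: {description}")
    return errors

print(validate({"age": 42, "email": "ana@example.com"}))  # []
print(validate({"age": 200, "email": "not-an-email"}))
```

Returning every violation, instead of stopping at the first, gives a complete picture of what is wrong with a record in one pass.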

Fix 5: Data Quality Monitoring

Data quality monitoring checks the extracted data continuously, rather than once, so that problems are caught as they appear. It typically tracks quality metrics such as completeness, consistency, and accuracy over time and raises an alert when a metric falls outside an agreed threshold. The results show where the extraction process needs improvement and when corrective action is required.
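
The sketch below profiles one extracted batch and flags threshold breaches. It covers only completeness and a duplicate-key rate as a simple consistency check; measuring accuracy needs a trusted reference dataset and is not shown. The `BatchReport` class, `profile_batch` function, and default thresholds are hypothetical, and in practice the alerts would feed whatever scheduling or alerting system you already use.

```python
from __future__ import annotations

from dataclasses import dataclass, field

@dataclass
class BatchReport:
    row_count: int
    completeness: dict[str, float]  # share of non-missing values per field
    duplicate_rate: float           # share of rows whose key was already seen
    alerts: list[str] = field(default_factory=list)

def profile_batch(rows: list[dict], fields: list[str], key: str,
                  min_completeness: float = 0.95,
                  max_duplicate_rate: float = 0.01) -> BatchReport:
    """Compute simple quality metrics for one batch and flag threshold breaches.

    The default thresholds are illustrative assumptions, not recommendations.
    """
    n = len(rows)
    if n == 0:
        return BatchReport(0, {}, 0.0, ["batch is empty"])
    completeness = {
        f: sum(1 for r in rows if r.get(f) not in (None, "")) / n for f in fields
    }
    seen: set = set()
    duplicates = 0
    for r in rows:
        k = r.get(key)
        if k in seen:
            duplicates += 1
        seen.add(k)
    report = BatchReport(n, completeness, duplicates / n)
    for f, share in completeness.items():
        if share < min_completeness:
            report.alerts.append(
                f"{f}: completeness {share:.0%} is below {min_completeness:.0%}")
    if report.duplicate_rate > max_duplicate_rate:
        report.alerts.append(
            f"{key}: duplicate rate {report.duplicate_rate:.0%} exceeds {max_duplicate_rate:.0%}")
    return report

batch = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": ""},
    {"id": 2, "email": "b@example.com"},
    {"id": 3, "email": "c@example.com"},
]
report = profile_batch(batch, fields=["id", "email"], key="id")
for alert in report.alerts:
    print(alert)
```

Storing each `BatchReport` over time turns these one-off checks into a trend, which is what makes gradual quality drift visible.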

📝 Note: It is essential to implement these extraction fixes to ensure that the extracted data is accurate, complete, and consistent.

In summary, handling missing values, standardizing formats, handling errors, validating against rules, and monitoring quality together improve the accuracy, completeness, and consistency of extracted data and help ensure that it meets the required standards. Whether you are working with large datasets or complex file formats, these fixes can help you achieve your data extraction goals.

What is data extraction?

Data extraction is the process of retrieving data from various sources, such as databases, files, or websites, and converting it into a format that can be used for analysis or other purposes.

Why is data standardization important?

Data standardization puts data from different sources into the same format, which keeps the extracted data consistent and makes it easy to compare and analyze.

How can I handle missing values in my data?

Handle missing values with a robust validation and cleaning process: check for missing values, identify why they are missing, and impute them with an appropriate method such as the mean, the median, or a machine-learning model.